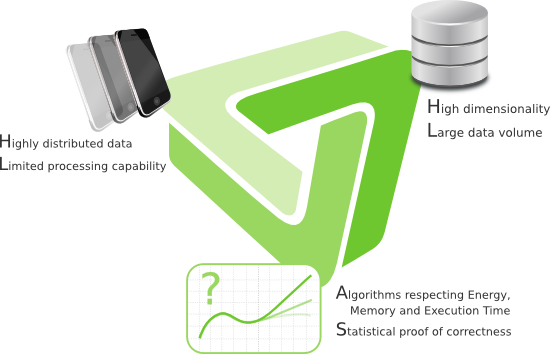

Data is piling up everywhere; everything is measured, logged and tracked. In addition, data is becoming ever more available: networked devices and sensors make it accessible regardless of time and location. The collaborative research center CRC 876 (in German: Sonderforschungsbereich SFB 876) targets the two major challenges arising from this development: highly distributed data, accessible only on devices with limited processing capability, on the one hand, and high-dimensional data and large data volumes on the other. Both ends of the spectrum share the same limitation: constrained resources. Classical machine learning algorithms typically cannot be applied here without adjustments that account for these limitations.

To provide solutions, the available data needs to be interpreted. The ubiquity of data access is matched by a demand for equally ubiquitous access to information. Intelligent processing close to where the data is generated (the small side) reduces complexity and thereby eases the analysis of the aggregated data (the large side).

The CRC's research projects provide flexible solutions for several areas of application. One specific example is car traffic navigation and forecasting. State-of-the-art traffic navigation already uses several different input parameters in real time, e.g. sensors integrated into highways or cellular network information. In addition, most modern cars provide fine-grained data about the car's status via their internal electronic control systems. The available data ranges from speed and acceleration to the status of the windshield wipers. While this data may help in sophisticated traffic analysis, transmitting it unprocessed to a central database incurs unacceptable costs and an excessive burden on mobile networks. The main task is to select, compress and transmit the locally available data for global information retrieval while respecting communication and processing constraints.
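One simple, widely used way to select data locally is a send-on-delta (deadband) filter: the car transmits a reading only when it has changed noticeably since the last transmitted value. The sketch below is a minimal illustration under that assumption, not the CRC's method; the function name `send_on_delta` and the threshold `delta` are hypothetical.

```python
from typing import Iterable, List, Optional, Tuple


def send_on_delta(samples: Iterable[Tuple[float, float]],
                  delta: float) -> List[Tuple[float, float]]:
    """Return the (timestamp, value) samples that would be transmitted.

    Hypothetical deadband filter: the first sample is always sent; after
    that, a sample is sent only if its value differs from the last *sent*
    value by at least `delta`. Everything else stays on the car.
    """
    sent: List[Tuple[float, float]] = []
    last_sent: Optional[float] = None
    for timestamp, value in samples:
        if last_sent is None or abs(value - last_sent) >= delta:
            sent.append((timestamp, value))
            last_sent = value
    return sent


# Hypothetical speed readings in km/h, one per second.
speeds = [(0, 50.0), (1, 50.4), (2, 51.2), (3, 51.5), (4, 55.0)]
transmitted = send_on_delta(speeds, delta=1.0)
```

With a threshold of 1 km/h, only three of the five readings leave the car. Comparing against the last sent value (rather than the previous sample) keeps slow drifts from going unreported indefinitely.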

Other application scenarios must deal with resource constraints where the available data is already aggregated but needs to be processed in real time to provide meaningful information. This is particularly relevant in medical emergencies. While it is possible to visually capture nanoparticles such as viruses, processing the resulting video stream and identifying the viruses is a time- and resource-consuming task. Reducing the time needed for recognition and increasing detection accuracy would greatly improve the quality of healthcare. Furthermore, taking additional hardware constraints (available energy, memory and CPU) into account in the analysis algorithms enables mobile and remote operation of the detection devices directly in the field.

These scenarios are only a small selection of the possible applications. The CRC consists of twelve projects, each with a different focus on providing information through resource-constrained data analysis. Together, the individual fields of research cover many parts of the typical data mining process, investigating and enhancing algorithms under resource constraints. Research topics and areas of expertise include:

Data mining algorithms: Extracting information from raw data has a long history in computer science. The research center concentrates on both ends of the spectrum: distributed data, available only through resource-constrained devices, and very large amounts of data. These constraints demand the development of new algorithms and implementations that achieve accuracy comparable to that of solutions for smaller problem domains. Depending on the type of problem, trade-offs between overall accuracy and resource consumption must be taken into account. Stream mining and distributed computation split the tasks into smaller, easier-to-handle chunks of data.
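To illustrate how stream mining and distributed computation can split work into chunks, the sketch below computes mean and variance in a single pass with constant memory (Welford's algorithm) and merges partial results from independently processed chunks (the pairwise update of Chan et al.). This is a standard textbook technique chosen as an example, not an algorithm from the CRC; the names `RunningStats` and `stats_of` are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class RunningStats:
    """Mean and variance of a stream in O(1) memory (Welford's algorithm)."""

    n: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the current mean

    def update(self, x: float) -> None:
        """Fold one new value into the statistics; the value is not stored."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def merge(self, other: "RunningStats") -> "RunningStats":
        """Combine the statistics of two independently processed chunks."""
        n = self.n + other.n
        if n == 0:
            return RunningStats()
        delta = other.mean - self.mean
        mean = self.mean + delta * other.n / n
        m2 = self.m2 + other.m2 + delta * delta * self.n * other.n / n
        return RunningStats(n, mean, m2)

    @property
    def variance(self) -> float:
        """Sample variance; 0.0 for fewer than two values."""
        return self.m2 / (self.n - 1) if self.n > 1 else 0.0


def stats_of(chunk: Iterable[float]) -> RunningStats:
    """Process one chunk of the stream, e.g. on a separate node."""
    stats = RunningStats()
    for x in chunk:
        stats.update(x)
    return stats


# Two nodes each see half of the data; only three numbers per node are exchanged.
left = stats_of([2, 4, 4, 4])
right = stats_of([5, 5, 7, 9])
combined = left.merge(right)
```

Each node only has to send its triple `(n, mean, m2)`, regardless of how many values it has seen, which is the kind of resource saving the chunked approach aims for.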

Feature selection and creation: When the number of available features is large compared to the number of available examples, efficiently selecting existing features or creating new, aggregated features is of major importance. Research in this area leads to new algorithms for this process that improve accuracy and reduce complexity.
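A very simple baseline for feature selection is a filter method: score each feature independently against the target and keep the best few. The sketch below ranks features by the absolute Pearson correlation with the target; it is one common, cheap technique chosen for illustration, not the approach developed in the CRC, and the function names are hypothetical.

```python
import math
from typing import List, Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    if sx == 0 or sy == 0:
        return 0.0  # a constant column carries no linear information
    return cov / (sx * sy)


def select_top_k(X: Sequence[Sequence[float]], y: Sequence[float], k: int) -> List[int]:
    """Indices of the k columns of X most correlated (in absolute value) with y."""
    n_features = len(X[0])
    scores = []
    for j in range(n_features):
        column = [row[j] for row in X]
        scores.append((abs(pearson(column, y)), j))
    # Highest score first; ties broken by the lower column index.
    scores.sort(key=lambda s: (-s[0], s[1]))
    return [j for _, j in scores[:k]]


# Hypothetical data: 4 examples, 3 features (the last one is constant).
X = [[1, 5, 0], [2, 3, 0], [3, 4, 0], [4, 1, 0]]
y = [2, 4, 6, 8]
selected = select_top_k(X, y, k=2)
```

Such filters scale linearly in the number of features, which makes them attractive when features vastly outnumber examples, but they ignore interactions and redundancy between features; more elaborate selection or feature-construction methods address exactly those weaknesses.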

Dedicated hardware optimization: The research center's goal of handling large amounts of data in parallel with reduced energy consumption can be addressed through optimizations for dedicated hardware. Two specific fields are General Purpose Computation on Graphics Processing Units (GPGPU) and Field Programmable Gate Arrays (FPGA).

GPGPU: Using graphics hardware can speed up data analysis through massive parallelization of subtasks. Although a GPU in some cases draws more peak power than a standard CPU running the same task, the overall energy consumed to complete the calculation can be lower because less time is needed.
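The energy argument follows from the fact that energy is average power multiplied by time. A worked example with purely hypothetical numbers (not measurements from the CRC):

```latex
\begin{aligned}
E &= \bar{P}\,t \\
E_{\mathrm{CPU}} &= 100\,\mathrm{W} \times 60\,\mathrm{s} = 6000\,\mathrm{J} \\
E_{\mathrm{GPU}} &= 250\,\mathrm{W} \times 12\,\mathrm{s} = 3000\,\mathrm{J} \\
E_{\mathrm{GPU}} < E_{\mathrm{CPU}}
  &\iff \bar{P}_{\mathrm{GPU}}\,t_{\mathrm{GPU}} < \bar{P}_{\mathrm{CPU}}\,t_{\mathrm{CPU}}
  \iff \frac{t_{\mathrm{CPU}}}{t_{\mathrm{GPU}}} > \frac{\bar{P}_{\mathrm{GPU}}}{\bar{P}_{\mathrm{CPU}}}
\end{aligned}
```

In this example the GPU draws 2.5 times the power but finishes 5 times sooner, so it needs half the energy. In general, the GPU saves energy only if its speedup exceeds its power ratio; for simplicity, the sketch ignores the power the host CPU still draws while the GPU is working.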

FPGA: Like GPUs, FPGAs can greatly reduce analysis time through a high degree of parallelism. Implementations of data analysis algorithms tailored to the task at hand, as opposed to general-purpose algorithms, deliver results faster. FPGA-specific development enables preprocessing and filtering on site, close to sensors and embedded devices. This efficiently reduces the amount of data before it needs to be centralized and stored. The final step transfers the implementation to Application-Specific Integrated Circuits (ASICs) for smaller footprints and higher clock rates.
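As an example of the kind of on-site preprocessing meant here, the sketch below models a block-averaging decimator, which reduces the sample rate by a fixed factor before the data leaves the sensor. It is written in Python only to describe the behavior; an FPGA version would be expressed in a hardware description language. The function name and the choice of decimation as the example are assumptions, not taken from the CRC's work.

```python
from typing import List, Sequence


def decimate_by_mean(samples: Sequence[int], factor: int) -> List[int]:
    """Average each non-overlapping block of `factor` samples into one output.

    Behavioral model of a hypothetical hardware filter: integer arithmetic
    only, floor division, and a trailing partial block is dropped. The
    output rate is 1/factor of the input rate.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    out: List[int] = []
    for start in range(0, len(samples) - factor + 1, factor):
        block = samples[start:start + factor]
        out.append(sum(block) // factor)  # floor division keeps results integral
    return out


# Hypothetical raw ADC readings; 9 samples become 2 outputs.
raw = [10, 12, 14, 16, 20, 22, 24, 26, 99]
reduced = decimate_by_mean(raw, factor=4)
```

Choosing a power-of-two factor is a common hardware-friendly design choice, because the division then reduces to a bit shift, which costs almost nothing in logic.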

Communication efficiency: The more widely the data is distributed, the harder it is to gain a holistic view of it or to derive global knowledge. Deciding intelligently what to distribute, and when, is a major optimization task. Data analysis algorithms need to incorporate the costs associated with transmitting data when deciding which pieces of information to send between network nodes.
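One way to make transmission costs part of the decision is to treat each candidate message as having an estimated benefit and a cost in bytes, and to fill a limited bandwidth budget with the best benefit-per-byte messages. The sketch below uses the classic greedy heuristic for this knapsack-style problem; the `Message` type, its `value` scores and the byte budget are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    name: str
    value: float  # hypothetical estimate of how much the message helps the global model
    size: int     # transmission cost in bytes

    def __post_init__(self) -> None:
        if self.size <= 0:
            raise ValueError("size must be positive")


def select_messages(candidates: List[Message], budget: int) -> List[Message]:
    """Greedily pick messages with the best value per byte that fit in `budget` bytes."""
    chosen: List[Message] = []
    remaining = budget
    for msg in sorted(candidates, key=lambda m: m.value / m.size, reverse=True):
        if msg.size <= remaining:
            chosen.append(msg)
            remaining -= msg.size
    return chosen


candidates = [
    Message("raw_trace", value=10.0, size=1000),
    Message("summary", value=6.0, size=100),
    Message("alert", value=3.0, size=20),
    Message("histogram", value=4.0, size=200),
]
picked = [m.name for m in select_messages(candidates, budget=300)]
```

The greedy rule is fast and simple enough for small devices, but it is not optimal in general; an exact solution would require dynamic programming over the budget, which costs considerably more memory.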

Energy consumption reduction: Energy is an important topic, especially for distributed systems where the amount of available energy is severely limited. Mobile phones, embedded systems or sensors at remote locations typically need to run as long as possible without recharging. Research into the consumption of each part of the system and the demands generated by the analysis algorithms leads to the definition of energy models. The derived models are used to evaluate a priori the efficiency of scheduled analysis runs. Naturally, the energy models cover communication behavior as well as the consumption of CPUs or GPUs. Finally, energy-aware algorithms are expected to reduce consumption both in large-scale computations, e.g. in server farms and clusters, and in embedded systems, conserving large amounts of valuable energy.
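A minimal sketch of how an energy model can be used for a priori evaluation, assuming the simplest possible form: each component draws a constant average power while busy, and the radio spends a fixed amount of energy per transmitted byte. Real energy models are usually considerably more detailed; the `EnergyModel` class, its parameters and all numbers below are hypothetical placeholders, not measured values.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class EnergyModel:
    """Hypothetical linear energy model for a single device."""

    power_w: Dict[str, float]  # average power per component while busy, in watts
    joules_per_byte: float     # radio energy per transmitted byte, in joules

    def estimate(self, busy_s: Dict[str, float], bytes_sent: int) -> float:
        """Predict the energy (J) of a planned run before executing it."""
        compute_j = sum(self.power_w[name] * seconds for name, seconds in busy_s.items())
        return compute_j + self.joules_per_byte * bytes_sent


def fits_budget(model: EnergyModel, busy_s: Dict[str, float],
                bytes_sent: int, available_j: float) -> bool:
    """True if the planned run is predicted to fit in the remaining energy."""
    return model.estimate(busy_s, bytes_sent) <= available_j


# Illustrative numbers only: compare two plans for the same analysis task.
model = EnergyModel(power_w={"cpu": 2.0, "gpu": 5.0}, joules_per_byte=1e-6)
local_plan = model.estimate({"cpu": 10.0, "gpu": 4.0}, bytes_sent=2_000)  # preprocess, send summary
raw_plan = model.estimate({"cpu": 1.0}, bytes_sent=50_000_000)            # upload raw data
```

With these made-up parameters, processing locally and sending a small summary is predicted to cost about 40 J versus about 52 J for uploading the raw data; with other parameters the comparison can easily flip, which is precisely why the estimate is computed before scheduling the run.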