In this section all historic news regarding the Collaborative Research Center SFB 876 can be explored.

The Collaborative Research Center 876 has investigated machine learning in the interplay of learning theory, algorithms, implementation, and computer architecture. Innovative methods combine high performance with low resource consumption. De Gruyter has now published the results of this new field of research in three comprehensive open access books.

The first volume establishes the foundations of this new field. It goes through all the steps from data collection, their summary and clustering, to the different aspects of resource-aware learning. These aspects include hardware, memory, energy, and communication awareness.

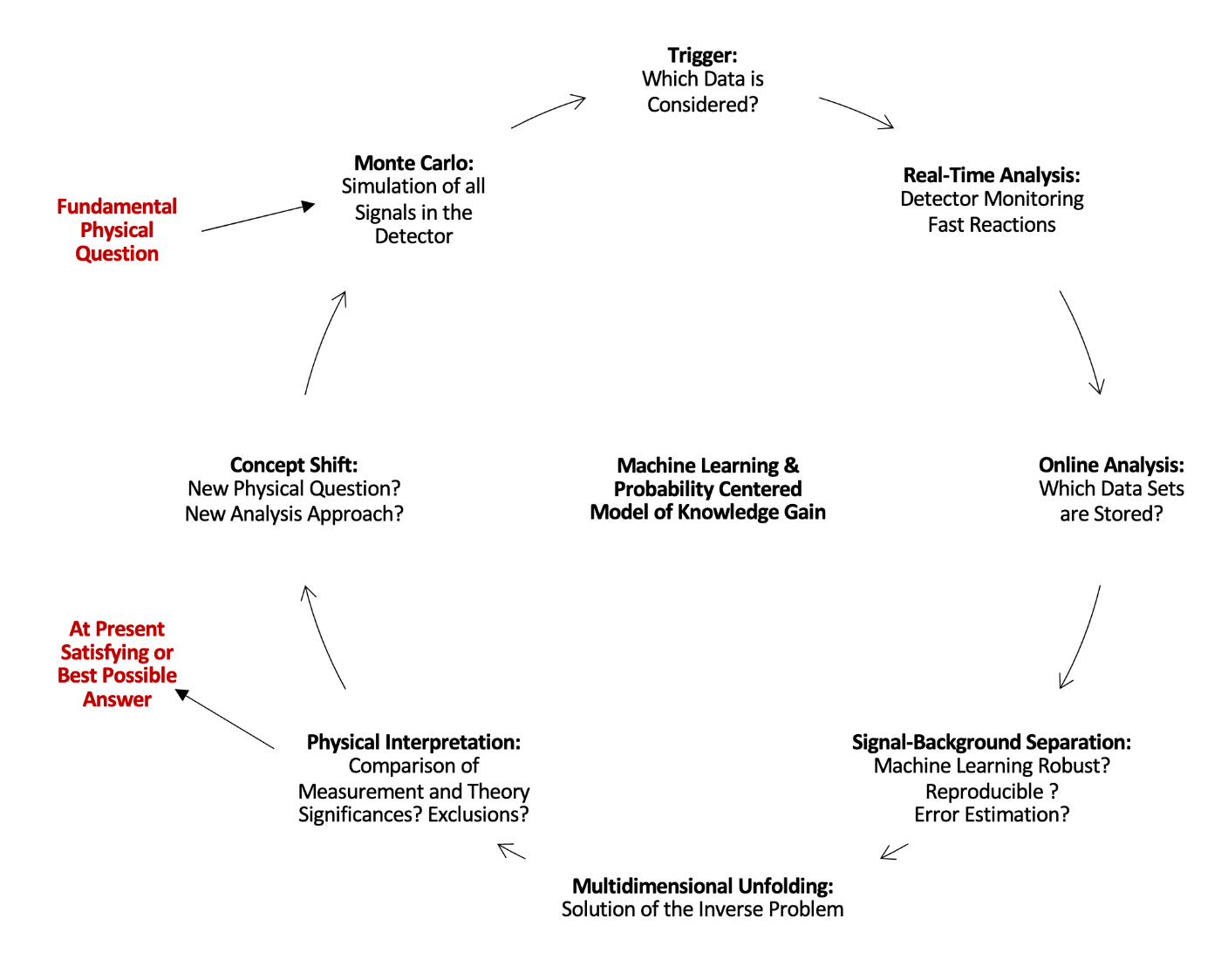

The second volume comprehensively presents machine learning in astroparticle and particle physics. Here, machine learning is necessary not only to process the vast amounts of data and to detect the relevant examples efficiently. It is also a part of the physics knowledge discovery process itself.

The third volume compiles applications in medicine and engineering. Applications of resource-aware machine learning are presented in detail for medicine, industrial production, traffic for smart cities, and communication networks.

All volumes are made for research, but are also excellent textbooks for teaching.

Reliable AI: Successes, Challenges, and Limitations

Abstract - Artificial intelligence is currently leading to one breakthrough after the other, both in public life with, for instance, autonomous driving and speech recognition, and in the sciences in areas such as medical diagnostics or molecular dynamics. However, one current major drawback is the lack of reliability of such methodologies.

In this lecture we will take a mathematical viewpoint towards this problem, showing the power of such approaches to reliability. We will first provide an introduction into this vibrant research area, focussing specifically on deep neural networks. We will then survey recent advances, in particular, concerning generalization guarantees and explainability. Finally, we will discuss fundamental limitations of deep neural networks and related approaches in terms of computability, which seriously affects their reliability.

Bio - Gitta Kutyniok currently holds a Bavarian AI Chair for Mathematical Foundations of Artificial Intelligence at the Ludwig-Maximilians Universität München. She received her Diploma in Mathematics and Computer Science as well as her Ph.D. degree from the Universität Paderborn in Germany, and her Habilitation in Mathematics in 2006 at the Justus-Liebig Universität Gießen. From 2001 to 2008 she held visiting positions at several US institutions, including Princeton University, Stanford University, Yale University, Georgia Institute of Technology, and Washington University in St. Louis, and was a Nachdiplomslecturer at ETH Zurich in 2014. In 2008, she became a full professor of mathematics at the Universität Osnabrück, and moved to Berlin three years later, where she held an Einstein Chair in the Institute of Mathematics at the Technische Universität Berlin and a courtesy appointment in the Department of Computer Science and Engineering until 2020. In addition, Gitta Kutyniok holds an Adjunct Professorship in Machine Learning at the University of Tromso since 2019.

Gitta Kutyniok has received various awards for her research such as an award from the Universität Paderborn in 2003, the Research Prize of the Justus-Liebig Universität Gießen and a Heisenberg-Fellowship in 2006, and the von Kaven Prize by the DFG in 2007. She was invited as the Noether Lecturer at the ÖMG-DMV Congress in 2013, a plenary lecturer at the 8th European Congress of Mathematics (8ECM) in 2021, the lecturer of the London Mathematical Society (LMS) Invited Lecture Series in 2022, and an invited lecturer at both the International Congress of Mathematicians 2022 and the International Congress on Industrial and Applied Mathematics 2023. Moreover, she became a member of the Berlin-Brandenburg Academy of Sciences and Humanities in 2017, a SIAM Fellow in 2019, and a member of the European Academy of Sciences in 2022. In addition, she was honored by a Francqui Chair of the Belgian Francqui Foundation in 2020. She was Chair of the SIAM Activity Group on Imaging Sciences from 2018-2019 and Vice Chair of the new SIAM Activity Group on Data Science in 2021, and currently serves as Vice President-at-Large of SIAM. She is also the spokesperson of the Research Focus "Next Generation AI" at the Center for Advanced Studies at LMU, and serves as LMU-Director of the Konrad Zuse School of Excellence in Reliable AI.

Gitta Kutyniok's research work covers, in particular, the areas of applied and computational harmonic analysis, artificial intelligence, compressed sensing, deep learning, imaging sciences, inverse problems, and applications to life sciences, robotics, and telecommunication.

Graphs in Space: Graph Embeddings for Machine Learning on Complex Data

https://tu-dortmund.zoom.us/j/97562861334?pwd=akg0RTNXZFZJTmlNZE1kRk01a3AyZz09

Abstract - In today’s world, data in graph and tabular form are being generated at astonishing rates, with algorithms for machine learning (ML) and data mining (DM) applied to such data being established as drivers of modern society. The field of graph embedding is concerned with bridging the “two worlds” of graph data (represented with nodes and edges) and tabular data (represented with rows and columns) by providing means for mapping graphs to tabular data sets, thus unlocking the use of a wide range of tabular ML and DM techniques on graphs. Graph embedding enjoys increased popularity in recent years, with a plethora of new methods being proposed. However, up to now none of them addressed the dimensionality of the new data space with any sort of depth, which is surprising since it is widely known that dimensionalities greater than 10–15 can lead to adverse effects on tabular ML and DM methods, collectively termed the “curse of dimensionality.” In this talk we will present the most interesting results of our project Graphs in Space: Graph Embeddings for Machine Learning on Complex Data (GRASP) where we investigated the impact of the curse of dimensionality on graph-embedding methods by using two well-studied artifacts of high-dimensional tabular data: (1) hubness (highly connected nodes in nearest-neighbor graphs obtained from tabular data) and (2) local intrinsic dimensionality (LID – number of dimensions needed to express the complexity around particular points in the data space based on properties of surrounding distances). After exploring the interactions between existing graph-embedding methods (focusing on node2vec), and hubness and LID, we will describe new methods based on node2vec that take these factors into account, achieving improved accuracy in at least one of two aspects: (1) graph reconstruction and community preservation in the new space, and (2) success of applications of the produced tabular data to the tasks of clustering and classification. Finally, we will discuss the potential for future research, including applications to similarity search and link prediction, as well as extensions to graphs that evolve over time.

Bio - Miloš Radovanović is Professor of Computer Science at the Department of Mathematics and Informatics, Faculty of Sciences, University of Novi Sad, Serbia. His research interests span many areas of data mining and machine learning, with special focus on problems related to high data dimensionality, complex networks, time-series analysis, and text mining, as well as techniques for classification, clustering, and outlier detection. He is Managing Editor of the journal Computer Science and Information Systems (ComSIS) and served as PC member for a large number of international conferences including KDD, ICDM, SDM, AAAI and SISAP.

more...

Rethinking of Computing - Memory-Centric or In-Memory Computing

Abstract - Flash memory opens a window of opportunities to a new world of computing over 20 years ago. Since then, storage devices gain their momentum in performance, energy, and even access behaviors. With over 1000 times in performance improvement over storage in recent years, there is another wave of adventure in removing traditional I/O bottlenecks in computer designs. In this talk, I shall first address the opportunities of new system architectures in computing. In particular, hybrid modules of DRAM and non-volatile memory (NVM) and all NVM-based main memory will be considered. I would also comment on a joint management framework of host/CPU and a hybrid memory module to break down the great memory wall by bridging the process information gap between host/CPU and a hybrid memory module. I will then present some solutions in neuromorphic computing which empower memory chips to own new capabilities in computing. In particular, I shall address challenges in in-memory computing in application co-designs and show how to utilize special characteristics of non-volatile memory in deep learning.

Bio - Prof. Kuo received his B.S.E. and Ph.D. degrees in Computer Science from National Taiwan University and University of Texas at Austin in 1986 and 1994, respectively. He is now Distinguished Professor of Department of Computer Science and Information Engineering of National Taiwan University, where he was an Interim President (2017.10-2019.01) and an Executive Vice President for Academics and Research (2016.08-2019.01). Between August 2019 and July 2022, Prof. Kuo took a leave to join City University of Hong Kong as Lee Shau Kee Chair Professor of Information Engineering, Advisor to President (Information Technology), and Founding Dean of College of Engineering. His research interest includes embedded systems, non-volatile-memory software designs, neuromorphic computing, and real-time systems.

Dr. Kuo is Fellow of ACM, IEEE, and US National Academy of Inventors. He is also a Member of European Academy of Sciences and Arts. He is Vice Chair of ACM SIGAPP and Chair of ACM SIGBED Award Committee. Prof. Kuo received numerous awards and recognition, including Humboldt Research Award (2021) from Alexander von Humboldt Foundation (Germany), Outstanding Technical Achievement and Leadership Award (2017) from IEEE Technical Committee on Real-Time Systems, and Distinguished Leadership Award (2017) from IEEE Technical Committee on Cyber-Physical Systems. Prof. Kuo is the founding Editor-in-Chief of ACM Transactions on Cyber-Physical Systems (2015-2021) and a program committee member of many top conferences. He has over 300 technical papers published in international journals and conferences and received many best paper awards, including the Best Paper Award from ACM/IEEE CODES+ISSS 2019 and 2022 and ACM HotStorage 2021.

more...

The interdisciplinary research area FAIR (together with members of project C4) organizes a two-day workshop on Sequence and Streaming Data Analysis.

This will take place

The goal of the workshop is to provide a basic understanding of similarity measures and classification and clustering algorithms for sequence data and data streams.

We welcome as presenting guests:

Registration is required. More information at:

more...

Data Considerations for Responsible Data-Driven Systems

Abstract - Data-driven systems collect, process and generate data from user interactions. To ensure these processes are responsible, we constrain them with a variety of social, legal, and ethical norms. In this talk, I will discuss several considerations for responsible data governance. I will show how responsibility concepts can be operationalized and highlight the computational and normative challenges that arise when these principles are implemented in practice.

Short bio - Asia J. Biega is a tenure-track faculty member at the Max Planck Institute for Security and Privacy (MPI-SP) leading the Responsible Computing group. Her research centers around developing, examining and computationally operationalizing principles of responsible computing, data governance & ethics, and digital well-being. Before joining MPI-SP, Asia worked at Microsoft Research Montréal in the Fairness, Accountability, Transparency, and Ethics in AI (FATE) Group. She completed her PhD in Computer Science at the MPI for Informatics and the MPI for Software Systems, winning the DBIS Dissertation Award of the German Informatics Society. In her work, Asia engages in interdisciplinary collaborations while drawing from her traditional CS education and her industry experience including stints at Microsoft and Google.

more...

At the SISAP 2022 conference at the University of Bologna, Lars Lenssen (SFB876, Project A2) won the "best student paper" award for the contribution "Lars Lenssen, Erich Schubert. Clustering by Direct Optimization of the Medoid Silhouette. In: Similarity Search and Applications. SISAP 2022. https://doi.org/10.1007/978-3-031-17849-8_15".

The publisher Springer donates a monetary prize for the awards, and the best contributions are invited to submit an extended version to a special issue of the A* journal "Information Systems".

In this paper, we introduce a new clustering method that directly optimizes the Medoid Silhouette, a variant of the popular Silhouette measure of clustering quality. As the new variant is O(k²) times faster than previous approaches, we can cluster data sets larger by orders of magnitude, where large values of k are desirable. The implementation is available in the Rust "kmedoids" crate and the Python module "kmedoids", the code is open source on Github.

The group is successful for the second time: In 2020, Erik Thordsen won the award with the contribution "Erik Thordsen, Erich Schubert. ABID: Angle Based Intrinsic Dimensionality. In: Similarity Search and Applications. SISAP 2020. https://doi.org/10.1007/978-3-030-60936-8_17".

This paper introduced a new angle-based estimator of the intrinsic dimensionality – a measure of local data complexity – traditionally estimated solely from distances.

Causal and counterfactual views of missing data models

Abstract - Modern cryptocurrencies, which are based on a public permissionless blockchain (such as Bitcoin), face tremendous scalability issues: With their broader adoption, conducting (financial) transactions within these systems becomes slow, costly, and resource-intensive. The source of these issues lies in the proof-of-work consensus mechanism that - by design - limits the throughput of transactions in a blockchain-based cryptocurrency. In the last years, several different approaches emerged to improve blockchain scalability. Broadly, these approaches can be categorized into solutions that aim at changing the underlying consensus mechanism (so-called layer-one solutions), and such solutions that aim to minimize the usage of the expensive blockchain consensus by off-loading blockchain computation to cryptographic protocols operating on top of the blockchain (so-called layer-two solutions). In this talk, I will overview the different approaches to improving blockchain scalability and discuss in more detail the workings of layer-two solutions, such as payment channels and payment channel networks.

Short bio - Clara Schneidewind is a Research Group Leader at the Max Planck Institute for Security and Privacy in Bochum. In her research, she aims to develop solutions for the meaningful, secure, resource-saving, and privacy-preserving usage of blockchain technologies. She completed her Ph.D. at the Technical University of Vienna in 2021. In 2019, she was a visiting scholar at the University of Pennsylvania. Since 2021 she leads the Heinz Nixdorf research group for Cryptocurrencies and Smart Contracts at the Max Planck Institute for Security and Privacy funded by the Heinz Nixdorf Foundation.

more...

Causal and counterfactual views of missing data models

Abstract - It is often said that the fundamental problem of causal inference is a missing data problem -- the comparison of responses to two hypothetical treatment assignments is made difficult because for every experimental unit only one potential response is observed. In this talk, we consider the implications of the converse view: that missing data problems are a form of causal inference. We make explicit how the missing data problem of recovering the complete data law from the observed data law can be viewed as identification of a joint distribution over counterfactual variables corresponding to values had we (possibly contrary to fact) been able to observe them. Drawing analogies with causal inference, we show how identification assumptions in missing data can be encoded in terms of graphical models defined over counterfactual and observed variables. We note interesting similarities and differences between missing data and causal inference theories. The validity of identification and estimation results using such techniques rely on the assumptions encoded by the graph holding true. Thus, we also provide new insights on the testable implications of a few common classes of missing data models, and design goodness-of-fit tests around them.

Short bio - Razieh Nabi is a Rollins Assistant Professor in the Department of Biostatistics and Bioinformatics at Emory Rollins School of Public Health. Her research is situated at the intersection of machine learning and statistics, focusing on causal inference and its applications in healthcare and social justice. More broadly, her work spans problems in causal inference, mediation analysis, algorithmic fairness, semiparametric inference, graphical models, and missing data. She has received her PhD (2021) in Computer Science from Johns Hopkins University.

Relevant papers:

At this year's ECML-PKDD (European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases), Andreas Roth and Thomas Liebig (both SFB 876 - B4) received the "Best Paper Award". In their paper "Transforming PageRank into an Infinite-Depth Graph Neural Network" they addressed a weakness of graph neural networks (GNNs). In GNNs, graph convolutions are used to determine appropriate representations for nodes that are supposed to link node features to context within a graph. If graph convolutions are performed multiple times in succession, the individual nodes within the graph lose information instead of benefiting from increased complexity. Since PageRank itself exhibits a similar problem, a long-established variant of PageRank is transformed into a Graph Neural Network. The intuitive derivation brings both theoretical and empirical advantages over several variants that have been widely used so far.

more...

Managing Large Knowledge Graphs: Techniques for Completion and Federated Querying

Abstract - Knowledge Graphs (KGs) allow for modeling inter-connected facts or statements annotated with semantics, in a semi-structured way. Typical applications of KGs include knowledge discovery, semantic search, recommendation systems, question answering, expert systems, and other AI tasks. In KGs, concepts and entities correspond to labeled nodes, while directed, labeled edges model their connections, creating a graph. Following the Linked Open Data initiatives, thousands of KGs have been published on the web represented with the Resource Description Framework (RDF) and queryable with the SPARQL language through different web services or interfaces. In this talk, we will address two relevant problems when managing large KGs. First, we will address the problem of KG completion, which is concerned with completing missing statements in the KG. We will focus on the task of entity type prediction and present an approach using Restricted Boltzmann Machines to learn the latent distribution of labeled edges for the entities in the KG. The solution implements a neural network architecture to predict entity types based on the learned representation. Experimental evidence shows that resulting representations of entities are much more separable with respect to their associations with classes in the KG, compared to existing methods. In the second part of this talk, we will address the problem of federated querying, which requires access to multiple, decentralized and autonomous KG sources. Due to advancements in technologies for publishing KGs on the web, sources can implement different interfaces which differ in their querying expressivity. I will present an interface-aware framework that exploits the capabilities of the member of the federations to speed up the query execution. The results over the FedBench benchmark with large KGs show a substantial improvement in performance by devising our interface-aware approach that exploits the capabilities of heterogeneous interfaces in federations. Finally, this talk will summarize further contributions of our work related to the problem of managing large KGs and conclude with an outlook to future work.

Short bio - Maribel Acosta is an Assistant Professor at the Ruhr-University Bochum, Germany, where she is the Head of the Database and Information Systems Group and a member of the Institute for Neural Computation (INI). Her research interests include query processing over decentralized knowledge graphs and knowledge graph quality with a special focus on completeness. More recently, she has applied Machine Learning approaches to these research topics. Maribel conducted her bachelor and master studies in Computer Science at the Universidad Simon Bolivar, Venezuela. In 2017, she finished her Ph.D. at the Karlsruhe Institute of Technology, Germany, where she was also a Postdoc and Lecturer until 2020. She is an active member of the (Semantic) Web and AI communities, and has acted as Research Track Co-chair (ESWC, SEMANTiCS) and reviewer of top conferences (WWW, AAAI, ICML, NEURIPS, ISWC, ESWC).

https://tu-dortmund.zoom.us/j/91486020936?pwd=bkxEdVZoVE5JMXNzRDJTdDdDZDRrZz09

more...

From September 12-16, 2022, the Collaborative Research Center 876 (CRC 876) at TU Dortmund University hosted its 6th International Summer School 2022 on Resource-aware Machine Learning. In 14 different lectures, the hybrid event allowed the approximately 70 participants present on site and more than 200 registered remote participants to enhance their skills in data analysis (machine learning, data mining, statistics), embedded systems, and applications of the demonstrated analysis techniques. The lectures were given by international experts in these research fields and covered topics such as Deep Learning on FPGAs, efficient Federated Learning, Machine Learning without power consumption, or generalization in Deep Learning.

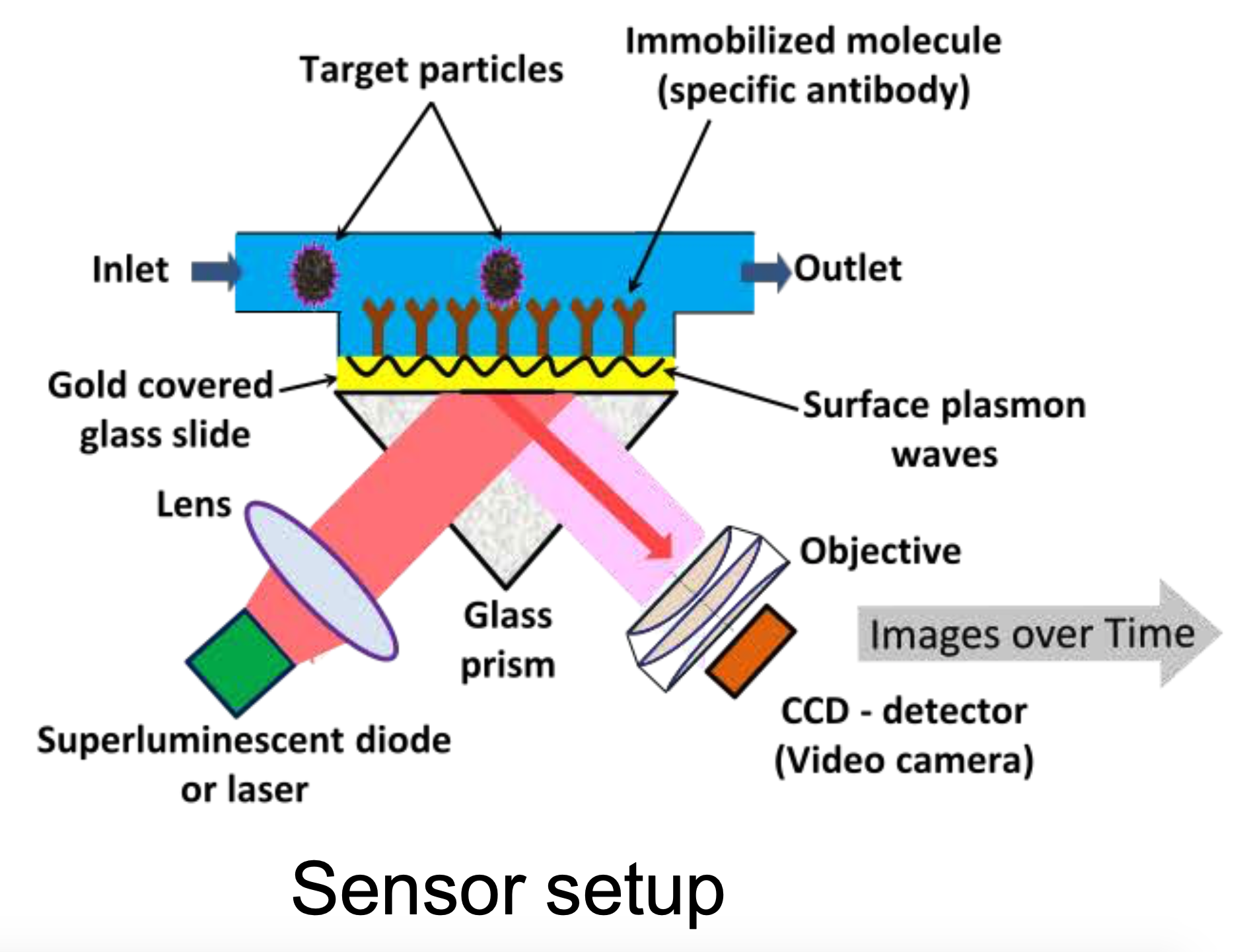

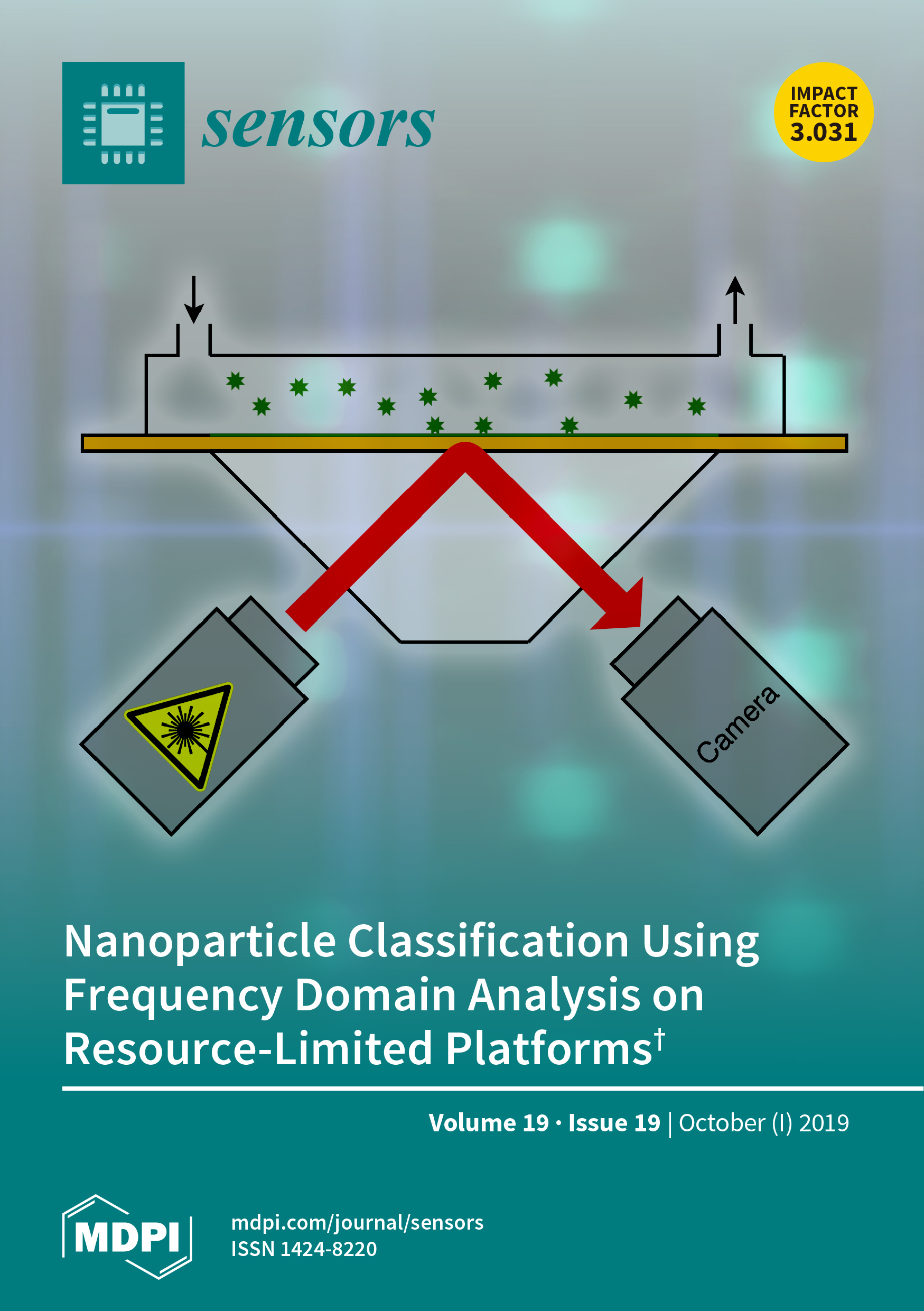

The on-site participants of the Summer School were CRC 876 members and international guests from eleven different countries. In the Student's Corner of the Summer School - an extended coffee break with poster presentations - they presented their research to each other, exchanged ideas and networked with each other. The Summer School’s hackathon put the participants' practical knowledge of machine learning to the test. In light of the current COVID-19 pandemic, participants were tasked with identifying virus-like nanoparticles using a plasmon-based microscopy sensor in a real-world data analysis scenario. The sensor and the analysis of its data are part of the research work of CRC 876. The goal of the analysis task was to detect samples with virus-like particles and to determine the viral load on an embedded system under resource constraints.

Details and information about the Summer School can be found at: https://sfb876.tu-dortmund.de/summer-school-2022/

Detection and validation of circular DNA fragments by finding plausible paths in a graph representation

Abstract - The presence of extra-chromosomal circular DNA in tumor cells has been acknowledged to be a marker of adverse effects across various cancer types. Detection of such circular fragments is potentially useful for disease monitoring.

Here we present a graph-based approach to detecting circular fragments from long-read sequencing data.

We demonstrate the robustness of the approach by recovering both known circles (such as the mitochondrion) as well as simulated ones.

Biographies:

Alicia Isabell Tüns did her bachelor's degree in biomedical engineering at the University of Applied Sciences Hamm-Lippstadt in 2016. She finished her master's degree in medical biology at the University of Duisburg-Essen in 2018. Since March 2019, she has been working as a Ph.D. student in the biology faculty at the University of Duisburg-Essen. Her research focuses on detecting molecular markers of relapse in lung cancer using nanopore sequencing technology.

Till Hartmann obtained his master's degree in computer science at TU Dortmund in 2017 and has been working as a Ph.D. student in the Genome Informatics group at the Institute of Human Genetics, University Hospital of Essen since then.

more...

CRC 876 Board member, Head of the Research Training Group, and Project Leader in Subproject C3, Prof. Dr. Dr. Wolfgang Rhode, will receive an honorary professorship from the Ruhr University Bochum (RuB) on May 30, 2022. The open event as part of the Physics Colloquium, organized by the Faculty of Physics and Astronomy at RuB, will take place in a hybrid format starting at 12 pm (CEST). The awarding of the honorary professorship will be accompanied by a laudation by Prof. Dr. Reinhard Schlickeiser (RuB). Registration is not required.

The event at a glance:

When: May 30, 2022, 12 pm (CEST) c.t.

Where: Ruhr University Bochum, Faculty of Physics and Astronomy

Universitätsstraße 150

Lecture hall H-NB

Online: Zoom

Prof. Dr. Dr. Wolfgang Rhode holds the professorship for Experimental Physics - Astroparticle Physics at TU Dortmund University. He is involved in the astroparticle experiments AMANDA, IceCube, MAGIC, FACT and CTA and does research in radio astronomy. His focus is on data analysis and the development of Monte Carlo methods as developed in CRC 876. Building on a long-standing collaboration with Katharina Morik on Machine Learning in astroparticle physics within CRC 876, both became co-founders of the DPG working group "Physics, Modern Information Technology and Artificial Intelligence" in 2017. Wolfgang Rhode is deputy speaker of the Collaborative Research Center 1491 - Cosmic Interacting Matters at the Ruhr University Bochum.

more...

The next step of energy-driven computer architecture devoment: In- and near-memory computing

Abstract - The development in the last two decades on the computer architecture side was primarily driven by energy aspects. Of course, the primary goal was to provide more compute performance but since the end of Dennard scaling this was only achievable by reducing the energy requirements in processing, moving and storing of data. This leaded to the development from single-core to multi-core, many-core processors, and increased use of heterogeneous architectures in which multi-core CPUs are co-operating with specialized accelerator cores. The next in this development are near- and in-memory computing concepts, which reduce energy-intensive data movements.

New non-volatile, so-called memristive, memory elements like Resistive RAMs (ReRAMs), Phase Change Memories (PCMs), Spin-torque Magnetic RAMS (STT-MRAMs) or devices with ferroelectric tunnel junctions (FTJs) play a decisive role in this context. They are not only predestined for low power reading but also for processing. In this sense they are devices which can be used in principle for storing and processing. Furthermore, such elements offer multi-bit capability that supports known but due to a so far lack of appropriate technology not realised ternary arithmetic. Furthermore, they are attractive for the use in low-power quantized neural networks. These benefits are opposed by difficulties in writing such elements expressed by low endurance and higher power requirements in writing compared to conventional SRAM or DRAM technology.

These pro and cons have to be carefully weighed against each other during computer design. In the talk wil be presented corresponding architectures examples which were developed by the group of the author and in collaboration work with others. The result of this research brought, e.g. mixed-signal neuromorphic architectures as well as ternary compute circuits for future low-power near- and in-memory computing architectures.

Biographie - Dietmar Fey is a Full Professor of Computer Science with Friedrich-Alexander-University Erlangen-Nürnberg (FAU). After his study in computer science at FAU he received his doctorate with a thesis in the field of Optical Computing in 1992 also at FAU. He was an Associate Professor from 2001 to 2009 for Computer Engineering with University Jena. Since 2009 he leads the Chair for Computer Architecture at FAU. His research interests are in parallel computer architectures, memristive computing, and embedded systems. He authored or co-authored more than 160 papers in proceedings and journals and published three books. Recently, he was involved in the establishing of a DFG priority program about memristive computing and in a research competition project awarded by the BMBF using memristive technology in deep neural networks.

Project C3 is proud to announce their "Workshop on Machine Learning for Astroparticle Physics and Astronomy" (ml.astro), co-located with INFORMATIK 2022.

The workshop will be held on September 26th 2022 in Hamburg, Germany and include invited as well as contributed talks. Contributions should be submitted as full papers of 6 to 10 pages until April 30th 2022 and may include, without being limited to, the following topics:

Machine learning applications in astroparticle physics and astronomy; Unfolding / deconvolution / quantification; Neural networks and graph neural networks (GNNs); Generative adversarial networks (GANs); Ensemble Methods; Unsupervised learning; Unsupervised domain adaptation; Active class selection; Imbalanced learning; Learning with domain knowledge; Particle reconstruction, tracking, and classification; Monte Carlo simulations Further information on the timeline and the submission of contributions is provided via the workshop website: https://sfb876.tu-dortmund.de/ml.astro/

Machine learning applications in astroparticle physics and astronomy; Unfolding / deconvolution / quantification; Neural networks and graph neural networks (GNNs); Generative adversarial networks (GANs); Ensemble Methods; Unsupervised learning; Unsupervised domain adaptation; Active class selection; Imbalanced learning; Learning with domain knowledge; Particle reconstruction, tracking, and classification; Monte Carlo simulations Further information on the timeline and the submission of contributions is provided via the workshop website: https://sfb876.tu-dortmund.de/ml.astro/

Predictability, a predicament?

Abstract - In the context of AI in general and Machine Learning in particular, predictability is usually considered a blessing. After all – that is the goal: build the model that has the highest predictive performance. The rise of ‘big data’ has in fact vastly improved our ability to predict human behavior thanks to the introduction of much more fine grained and informative features. However, in practice things are more complicated. For many applications, the relevant outcome is observed for very different reasons. In such mixed scenarios, the model will automatically gravitate to the one that is easiest to predict at the expense of the others. This even holds if the more predictable scenario is by far less common or relevant. We present a number of applications across different domains where the availability of highly informative features can have significantly negative impacts on the usefulness of predictive modeling and potentially create second order biases in the predictions. Neither model transparency nor first order data de-biases are ultimately able to mitigate those concerns. The moral imperative of those effects is that as creators of machine learning solutions it is our responsibility to pay attention to the often subtle symptoms and to let our human intuition be the gatekeeper when deciding whether models are ready to be released 'into the wild'.

Short bio - Claudia Perlich started her career at the IBM T.J. Watson Research Center, concentrating on research and application of Machine Learning for complex real-world domains and applications. From 2010 to 2017 she acted as the Chief Scientist at Dstillery where she designed, developed, analyzed, and optimized machine learning that drives digital advertising to prospective customers of brands. Her latest role is Head of Strategic Data Science at TwoSigma where she is creating quantitative strategies for both private and public investments. Claudia continues to be an active public speaker, has over 50 scientific publications, as well as numerous patents in the area of machine learning. She has won many data mining competitions and best paper awards at Knowledge Discovery and Data Mining (KDD) conference, where she served as the General Chair in 2014. Claudia is the past winner of the Advertising Research Foundation’s (ARF) Grand Innovation Award and has been selected for Crain’s New York’s 40 Under 40 list, Wired Magazine’s Smart List, and Fast Company’s 100 Most Creative People. She acts as an advisor to a number of philanthropic organizations including AI for Good, Datakind, Data and Society and others. She received her PhD in Information Systems from the NYU Stern School of Business where she continues to teach as an adjunct professor.

more...

The Chair VIII of the Faculty of Computer Science has an immediate vacancy for a student assistant (SHK / WHF). The number of hours can be discussed individually. The offer is aimed at students of computer science who have completed their studies with very good results.

You can find more information about the positions and your application when clicking "mehr".

more...

Reconciling knowledge-based and data-driven AI for human-in-the-loop machine learning

Abstract - For many practical applications of machine learning it is appropriate or even necessary to make use of human expertise to compensate a too small amount or low quality of data. Taking into account knowledge which is available in explicit form reduces the amount of data needed for learning. Furthermore, even if domain experts cannot formulate knowledge explicitly, they typically can recognize and correct erroneous decisions or actions. This type of implicit knowledge can be injected into the learning process to guide model adapation. In the talk, I will introduce inductive logic programming (ILP) as a powerful interpretable machine learning approach which allows to combine logic and learning. In ILP domain theories, background knowledge, training examples, and the learned model are represented in the same format, namely Horn theories. I will present first ideas how to combine CNNS and ILP into a neuro-symbolic framework. Afterwards, I will address the topic of explanatory AI. I will argue that, although ILP-learned models are symbolic (white-box), it might nevertheless be necessary to explain system decisions. Depending on who needs an explanation for what goal in which situation, different forms of explanations are necessary. I will show how ILP can be combined with different methods for explanation generation and propose a framework for human-in-the-loop learning. There, explanations are designed to be mutual -- not only from the AI system for the human but also the other way around. The presented approach will be illustrated with different application domains from medical diagnostics, file management, and quality control in manufacturing.

Short CV - Ute Schmid is a professor of Applied Computer Science/Cognitive Systems at University of Bamberg since 2004. She received university diplomas both in psychology and in computer science from Technical University Berlin (TUB). She received her doctoral degree (Dr.rer.nat.) in computer science in 1994 and her habilitation in computer science in 2002 from TUB. From 1994 to 2001 she was assistant professor at the Methods of AI/Machine Learning group, Department of Computer Science, TUB. After a one year stay as DFG-funded researcher at Carnegie Mellon University, she worked as lecturer for Intelligent Systems at the Department of Mathematics and Computer Science at University Osnabrück and was member of the Cognitive Science Institute. Ute Schmid is member of the board of directors of the Bavarian Insistute of Digital Transformation (bidt) and a member of the Bavarian AI Council (Bayerischer KI-Rat). Since 2020 she is head of the Fraunhofer IIS project group Comprehensible AI (CAI). Ute Schmid dedicates a significant amount of her time to measures supporting women in computer science and to promote computer science as a topic in elementary, primary, and secondary education. She won the Minerva Award of Informatics Europe 2018 for her university. Since many years, Ute Schmid is engaged in educating the public about artificial intelligence in general and machine learning and she gives workshops for teachers as well as high-school students about AI and machine learning. For her outreach activities she has been awarded with the Rainer-Markgraf-Preis 2020.

more...

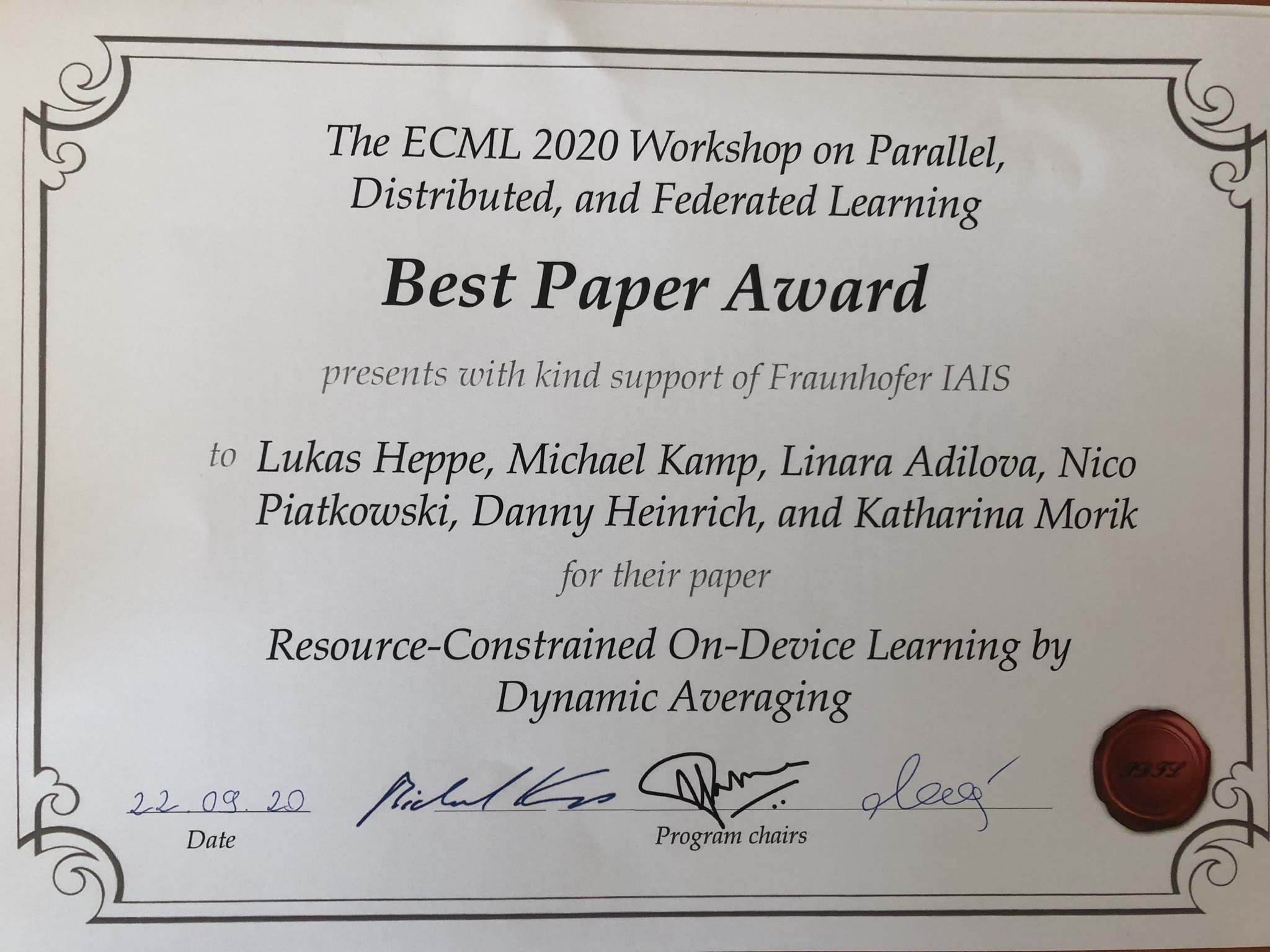

Trustworthy Federated Learning

Abstract - Data science is taking the world by storm, but its confident application in practice requires that the methods used are effective and trustworthy. This was already a difficult task when data fit onto a desktop computer, but becomes even harder now that data sources are ubiquitous and inherently distributed. In many applications (such as autonomous driving, industrial machines, or healthcare) it is impossible or hugely impractical to gather all their data into one place, not only because of the sheer size but also because of data privacy. Federated learning offers a solution: models are trained only locally and combined to create a well-performing joint model - without sharing data. However, this comes at a cost: unlike classical parallelizations, the result of federated learning is not the same as centralized learning on all data. To make this approach trustworthy, we need to guarantee high model quality (as well as robustness to adversarial examples); this is challenging in particular for deep learning where satisfying guarantees cannot even be given for the centralized case. Simultaneously ensuring data privacy and maintaining effective and communication-efficient training is a huge undertaking. In my talk I will present practically useful and theoretically sound federated learning approaches, and show novel approaches to tackle the exciting open problems on the path to trustworthy federated learning.

Biographie - I am leader of the research group "Trustworthy Machine Learning" at the Institut für KI in der Medizin (IKIM), located at the Ruhr-University Bochum. In 2021 I was a postdoctoral researcher at the CISPA Helmholtz Center for Information Security in the Exploratory Data Analysis group of Jilles Vreeken. From 2019 to 2021 I was a postdoctoral research fellow at Monash University, where I am still an affiliated researcher. From 2011 to 2019 I was a data scientist at Fraunhofer IAIS, where I lead Fraunhofer’s part in the EU project DiSIEM, managing a small research team. Moreover, I was a project-specific consultant and researcher, e.g., for Volkswagen, DHL, and Hussel, and I designed and gave industrial trainings. Since 2014 I was simultaneously a doctoral researcher at the University of Bonn, teaching graduate labs and seminars, and supervising Master’s and Bachelor’s theses. Before that, I worked for 10 years as a software developer.

more...

We are pleased to announce that Dr. Andrea Bommert has received the TU Dortmund University Dissertation Award. She completed her dissertation entitled "Integration of Feature Selection Stability in Model Fitting", with distinction (summa cum laude) earlier this year on January 20, 2021. The dissertation award would have been presented to her on Dec. 16, 2021, during this year's annual academic celebration, but the annual celebration had to be cancelled due to the Corona pandemic.

In her work, Andrea Bommert developed measures for assessing variable selection stability as well as strategies for fitting good models using variable selection stability and successfully applied them. She is a research associate at the Department of Statistics and a member of the Collaborative Research Center 876 (Project A3).

We congratulate her on this year's dissertation award of the TU Dortmund University!

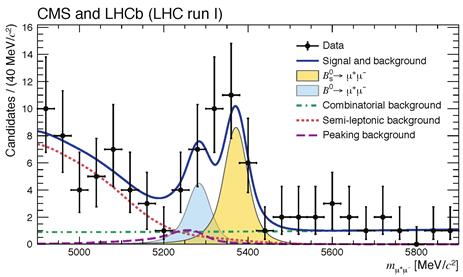

Bernhard Spaan, 25.04.1960 - 9.12.2021, Professor for Experimental Physics at TU Dortmund University since 2004, part of the LHCb collaboration at CERN since the beginning, member of the board of the Dortmund Data Science Center, from the beginning project leader in SFB 876 "C5 Real-Time Analysis and Storage for High-Volume Data from Particle Physics" together with Jens Teubner.

The data acquisition of the large experiments, such as LHCb, is done with devices. And hence Bernhard Spaan even realized data analysis with devices at first. The algorithmic side in addition to statistics and physical experimentation was added by machine learning. He was always concerned with the fundamental questions about the universe, especially about antimatter. He once told me that I could achieve something important with my methods after all, namely physical knowledge. He advanced this knowledge with many collaborations and also pursued it in the SFB 876.

Bernhard Spaan has also championed physics and data analysis in collegial cooperation across faculty boundaries. Together we wrote down a credo on interdisciplinary "Big Volume Data Driven Science" at TU Dortmund. His warm-hearted solidarity with all those who are committed to the university has made the free exchange of ideas among colleagues easy. The long evening of Ursula Gather's election as rector was also very impressive: we stood in the stairwell and Bernhard had a tablet on which we could watch the BVB game that was taking place. When DoDSC was founded, we went to the stadium to celebrate and were lucky enough to see a sensational 4-0 BVB victory. Bernhard exuded so much joie de vivre, combining academics, good wine, sports, and creating academic life together. It is hard to comprehend that his life has now already come to an end.

His death is a great loss for the whole SFB 876, he is missed.

In deep mourning

Katharina Morik

We are pleased to announce that Pierre Haritz (TU Dortmund), Helena Kotthaus (ML2R), Thomas Liebig (SFB 876 - B4) and Lukas Pfahler (SFB 876 - A1) have received the "Best Paper Award" for the paper "Self-Supervised Source Code Annotation from Related Research Papers" at the IEEE ICDM PhD Forum 2021.

To increase the understanding and reusability of third-party source code, the paper proposes a prototype tool based on BERT models. The underlying neural network learns common structures between scientific publications and their implementations based on variables occurring in the text and source code, and will be used to annotate scientific code with information from the respective publication.

more...

Responsible continual learning

Abstract - Lifelong learning from non-stationary data streams remains a long-standing challenge for machine learning as incremental learning might lead to catastrophic forgetting or interference. Existing works mainly focus on how to retain valid knowledge learned thus far without hindering learning new knowledge and refining existing knowledge when necessary. Despite the strong interest on responsible AI, including aspects like fairness, explainability etc, such aspects are not yet addressed in the context of continual learning.

Biographie - Eirini Ntoutsi is a professor for Artificial Intelligence at the Free University (FU) Berlin. Prior to that, she was an associate professor of Intelligent Systems at the Leibniz University of Hanover (LUH), Germany. Before that, she was a post-doctoral researcher at the Ludwig-Maximilians-University (LMU) in Munich. She holds a Ph.D. from the University of Piraeus, Greece, and a master's and diploma in Computer Engineering and Informatics from the University of Patras, Greece. Her research lies in the fields of Artificial Intelligence (AI) and Machine Learning (ML) and aims at designing intelligent algorithms that learn from data continuously following the cumulative nature of human learning while mitigating the risks of the technology and ensuring long-term positive social impact. However responsibility aspects are even more important in such a setting. In this talk, I will cover some of these aspects, namely fairness w.r.t. some protected attribute(s), explainability of model decisions and unlearning due to e.g., malicious instances.

more...

Algorithmic recourse: from theory to practice

Abstract - In this talk I will introduce the concept of algorithmic recourse, which aims to help individuals affected by an unfavorable algorithmic decision to recover from it. First, I will show that while the concept of algorithmic recourse is strongly related to counterfactual explanations, existing methods for the later do not directly provide practical solutions for algorithmic recourse, as they do not account for the causal mechanisms governing the world. Then, I will show theoretical results that prove the need of complete causal knowledge to guarantee recourse and show how algorithmic recourse can be useful to provide novel fairness definitions that short the focus from the algorithm to the data distribution. Such novel definition of fairness allows us to distinguish between situations where unfairness can be better addressed by societal intervention, as opposed to changes on the classifiers. Finally, I will show practical solutions for (fairness in) algorithmic recourse, in realistic scenarios where the causal knowledge is only limited.

Biographie - I am a full Professor on Machine Learning at the Department of Computer Science of Saarland University in Saarbrücken (Germany), and Adjunct Faculty at MPI for Software Systems in Saarbrücken (Germany). I am a fellow of the European Laboratory for Learning and Intelligent Systems ( ELLIS), where I am part of the Robust Machine Learning Program and of the Saarbrücken Artificial Intelligence & Machine learning (Sam) Unit. Prior to this, I was an independent group leader at the MPI for Intelligent Systems in Tübingen (Germany) until the end of the year. I have held a German Humboldt Post-Doctoral Fellowship, and a “Minerva fast track” fellowship from the Max Planck Society. I obtained my PhD in 2014 and MSc degree in 2012 from the University Carlos III in Madrid (Spain), and worked as postdoctoral researcher at the MPI for Software Systems (Germany) and at the University of Cambridge (UK).

more...

Resource-Constrained and Hardware-Accelerated Machine Learning

Abstract - The resource and energy consumption of machine learning is the major topic of the collaborative research center. We are often concerned with the runtime and resource consumption of model training, but little focus is set on the application of trained ML models. However, the continuous application of ML models can quickly outgrow the resources required for its initial training and also inference accuracy. This seminar presents the recent research activities in the A1 project in the context of resource-constrained and hardware-accelerated machine learning. It consists of three parts contributed by Sebastian Buschjaeger (est. 30 min), Christian Hakert (est. 15 min), and Mikail Yayla (est. 15 min).

Talks:

FastInference - Applying Large Models on Small Devices

Speaker: Sebastian Buschjaeger

Abstract: In the first half of my talk I will discuss ensemble pruning and leaf-refinement as approaches to improve the accuracy-resource trade-off of Random Forests. In the second half I will discuss the FastInference tool which combines these optimizations with the execution of models into a single framework.

Gardening Random Forests: Planting, Shaping, BLOwing, Pruning, and Ennobling

Speaker: Christian Hakert

Abstract: While keeping the tree structure untouched, we reshape the memory layout of random forest ensembles. By exploiting architectural properties, as for instance CPU registers, caches or NVM latencies, we multiply the speed for random forest inference without changing their accuracy.

Error Resilient and Efficient BNNs on the Cutting Edge

Speaker: Mikail Yayla

Abstract: BNNs can be optimized for high error resilience. We explore how this can be exploited in the design of efficient hardware for BNNs, by using emerging computing paradigms, such as in-memory and approximate computing.

AI for Processes: Powered by Process Mining

Abstract - Process mining has quickly become a standard way to analyze performance and compliance issues in organizations. Professor Wil van der Aalst, also known as “the godfather of process mining”, will explain what process mining is and reflect on recent developments in process and data science. The abundance of event data and the availability of powerful process mining tools make it possible to remove operational friction in organizations. Process mining reveals how processes behave "in the wild". Seemingly simple processes like Order-to-Cash (OTC) and Purchase-to-Pay (P2P), turn out to be much more complex than anticipated, and process mining software can be used to dramatically improve such processes. This requires a different kind of Artificial Intelligence (AI) and Machine Learning (ML). Germany is world-leading in process mining with the research done at RWTH and software companies such as Celonis. Process mining is also a beautiful illustration how scientific research can lead to innovations and new economic activity.

Biographie - Prof.dr.ir. Wil van der Aalst is a full professor at RWTH Aachen University, leading the Process and Data Science (PADS) group. He is also the Chief Scientist at Celonis, part-time affiliated with the Fraunhofer FIT, and a member of the Board of Governors of Tilburg University. He also has unpaid professorship positions at Queensland University of Technology (since 2003) and the Technische Universiteit Eindhoven (TU/e). Currently, he is also a distinguished fellow of Fondazione Bruno Kessler (FBK) in Trento, deputy CEO of the Internet of Production (IoP) Cluster of Excellence, co-director of the RWTH Center for Artificial Intelligence. His research interests include process mining, Petri nets, business process management, workflow automation, simulation, process modeling, and model-based analysis. Many of his papers are highly cited (he is one of the most-cited computer scientists in the world and has an H-index of 159 according to Google Scholar with over 119,000 citations), and his ideas have influenced researchers, software developers, and standardization committees working on process support. He previously served on the advisory boards of several organizations, including Fluxicon, Celonis, ProcessGold/UiPath, and aiConomix. Van der Aalst received honorary degrees from the Moscow Higher School of Economics (Prof. h.c.), Tsinghua University, and Hasselt University (Dr. h.c.). He is also an IFIP Fellow, IEEE Fellow, ACM Fellow, and an elected member of the Royal Netherlands Academy of Arts and Sciences, the Royal Ho

more...

We are very happy to announce that Pascal Jörke and Christian Wietfeld from project A4 have received the "2nd Place Best Paper Award" for the paper "How Green Networking May Harm Your IoT Network: Impact of Transmit Power Reduction at Night on NB-IoT Performance" at the IEEE World Forum on Internet of Things (WF-IoT) 2021.

The paper is a joint work of the Collaborative Research Center (SFB 876) and PuLS project. Longterm measurements of NB-IoT signal strength in public cellular networks have shown that at night in some cases base stations reduce their transmit power, which leads to a significant performance decrease in latency and energy efficiency by up to a factor of 4, having a substantial impact on battery-powered IoT devices and therefore should be avoided.

While green networking saves energy and money on base station sites, the impact on IoT devices must also be studied. Signal strength measurements show that in NB-IoT networks base stations reduce the transmit power at night or even shut-off, forcing NB-IoT devices to switch in remaining cells with worse signal strength. Therefore, this paper analyses the impact of downlink transmission power reduction at night on the latency, energy consumption, and battery lifetime of NB-IoT devices. For this purpose, extensive latency and energy measurements of acknowledged NB-IoT uplink data transmissions have been performed for various signal strength values. The results show that devices experience increased latency by up to a factor of 3.5 when transmitting at night, depending on signal strength. In terms of energy consumption, a single data transmission uses up to 3.2 times more energy. For a 5 Wh battery, a weak downlink signal at night reduces the device battery lifetime by up to 4 years on a single battery. Devices at the cell edge may even lose the cell connectivity and enter a high power cell search state, reducing the average battery lifetime of these devices to as low as 1 year. Therefore, transmit power reduction at night and cell shut-offs should be minimized or avoided for NB-IoT networks.

Reference: P. Jörke, C. Wietfeld, "How Green Networking May Harm Your IoT Network: Impact of Transmit Power Reduction at Night on NB-IoT Performance", In 2021 IEEE 7th World Forum on Internet of Things (WF-IoT), New Orleans, USA, Juni 2021. [pdf][video]

more...

Using Logic to Understand Learning

Abstract - A fundamental question in Deep Learning today is the following: Why do neural networks generalize when they have sufficient capacity to memorize their training set. In this talk, I will describe how ideas from logic synthesis can help answer this question. In particular, using the idea of small lookup tables, such as those used in FPGAs, we will see if memorization alone can lead to generalization; and then using ideas from logic simulation, we will see if neural networks do in fact behave like lookup tables. Finally, I’ll present a brief overview of a new theory of generalization for deep learning that has emerged from this line of work.

Biography - Sat Chatterjee is an Engineering Leader and Machine Learning Researcher at Google AI. His current research focuses on fundamental questions in deep learning (such as understanding why neural networks generalize at all) as well as various applications of ML (such as hardware design and verification). Before Google, he was a Senior Vice President at Two Sigma, a leading quantitative investment manager, where he founded one of the first successful deep learning-based alpha research groups on Wall Street and led a team that built one of the earliest end-to-end FPGA-based trading systems for general-purpose ultra-low latency trading. Prior to that, he was a Research Scientist at Intel where he worked on microarchitectural performance analysis and formal verification for on-chip networks. He did his undergraduate studies at IIT Bombay, has a PhD in Computer Science from UC Berkeley, and has published in the top machine learning, design automation, and formal verification conferences.

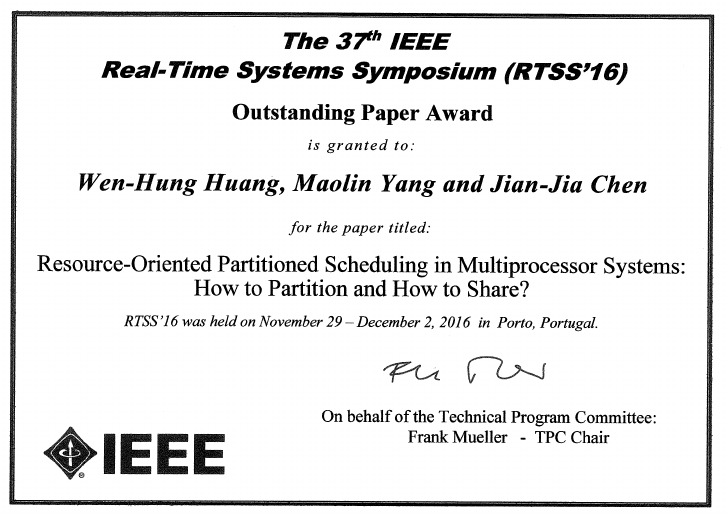

SFB board member and co-project leader of SFB subprojects A1 and A3 Prof. Dr. Jian Jia Chen is General Chair of this year's IEEE Real-Time Systems Symposium (RTSS) from December 7 to 10. The RTSS is the leading conference in the field of real-time systems and provides a forum of exchange and collaboration for researchers and practitioners. The focus hereby lies on theory, design, analysis, implementation, evaluation, and experience concerning real-time systems. This year, the four-day hybrid event, which includes scientific presentations, an Industry Session, a Hot Topic Day, and an Open Demo Session, will be held in Dortmund, Germany.

more...

Learning a Fair Distance Function for Situation Testing

Abstract - Situation testing is a method used in life sciences to prove discrimination. The idea is to put similar testers, who only differ in their membership to a protected-by-law group, in the same situation such as applying for a job. If the instances of the protected-by-law group are consistently treated less favorably than their non-protected counterparts, we assume discrimination occurred. Recently, data-driven equivalents of this practice were proposed, based on finding similar instances with significant differences in treatment between the protected and unprotected ones. A crucial and highly non-trivial component in these approaches, however, is finding a suitable distance function to define similarity in the dataset. This distance function should disregard attributes irrelevant for the classification, and weigh the other attributes according to their relevance for the label. Ideally, such a distance function should not be provided by the analyst but should be learned from the data without depending on external resources like Causal Bayesian Networks. In this paper, we show how to solve this problem based on learning a Weighted Euclidean distance function. We demonstrate how this new way of defining distances improves the performance of current situation testing algorithms, especially in the presence of irrelevant attributes.

Short bio - Daphne Lenders is a PhD researcher at the University of Antwerp where she, under the supervision of prof. Toon Calders studies fairness in Machine Learning. Daphne is especially interested in the requirements of fair ML algorithms, not just from a technical-, but also from a legal and usability perspective. Her interest in ethical AI applications already developed in her Masters, where she dedicated her thesis to the topic of explainable AI.

Sebastian Buschjäger has published the software "Fastinference". It is a model optimizer and model compiler that generates the optimal implementation for a model and a hardware architecture. It supports classical machine learning methods like decision trees and random forests as well as modern deep learning architectures.

more...

Bayesian Data Analysis for quantitative Magnetic Resonance Fingerprinting

Abstract - Magnetic Resonance Imaging (MRI) is a medical imaging technique which is widely used in clinical practice. Usually, only qualitative images are obtained. The goal in quantitative MRI (qMRI) is a quantitative determination of tissue- related parameters. In 2013, Magnetic Resonance Fingerprinting (MRF) was introduced as a fast method for qMRI which simultaneously estimates the parameters of interest. In this talk, I will present main results of my PhD thesis in which I applied Bayesian methods for the data analysis of MRF. A novel, Bayesian uncertainty analysis for the conventional MRF method is introduced as well as a new MRF approach in which the data are modelled directly in the Fourier domain. Furthermore, results from both MRF approaches will be compared with regard to various aspects.

Biographie - Selma Metzner studied Mathematics at Friedrich-Schiller-University in Jena. She then started her PhD at PTB Berlin and successfully defended her thesis in September 2021. Currently she is working on a DFG project with the title: Bayesian compressed sensing for nanoscale chemical mapping in the mid- infrared regime.

GraphAttack+MAPLE: Optimizing Data Supply for Graph Applications on In-Order Multicore Architectures

Abstract - Graph structures are a natural representation for data generated by a wide range of sources. While graph applications have significant parallelism, their pointer indirect accesses to neighbor data hinder scalability. A scalable and efficient system must tolerate latency while leveraging data parallelism across millions of vertices. Existing solutions have shortcomings; modern OoO cores are area- and energy-inefficient, while specialized accelerator and memory hierarchy designs cannot support diverse application demands.In this talk we will describe a full-stack data supply approach, GraphAttack, that accelerates graph applications on in-order multi-core architectures by mitigating latency bottlenecks. GraphAttack's compiler identifies long-latency loads and slices programs along these loads into Producer/Consumer threads to map onto pairs of parallel cores. A specialized hardware unit shared by each core pair, called Memory Access Parallel-Load Engine (MAPLE), allows tracking and buffering of asynchronous loads issued by the Producer whose data are used by the Consumer. In equal-area comparisons via simulation, GraphAttack outperforms OoO cores, do-all parallelism, prefetching, and prior decoupling approaches, achieving a 2.87x speedup and 8.61x gain in energy efficiency across a range of graph applications. These improvements scale; GraphAttack achieves a 3x speedup over 64 parallel cores. Our approach has been further validated on a dual-core FPGA prototype running applications with full SMP Linux, where we have demonstrated speedups of 2.35x and 2.27x over software-based prefetching and decoupling, respectively. Lastly, this approach has been taped out in silicon as part of a manycore chip design.

Short bio

Esin Tureci is an Associate Research Scholar in the Department of Computer Science at Princeton University, working with Professor Margaret Martonosi. Tureci works on a range of research problems in computer architecture design and verification including hardware-software co-design of heterogeneous systems targeting efficient data movement, design of efficient memory consistency model verification tools and more recently, optimization of hybrid classical-quantum computing approaches. Tureci has a PhD in Biophysics from Cornell University and has worked as a high-frequency algorithmic trader prior to her work in Computer Science.

www.cs.princeton.edu/

Aninda Manocha is currently a Computer Science PhD student at Princeton University advised by Margaret Martonosi. Her broad area of research is computer architecture, with specific interests in data supply techniques across the computing stack for graph and other emerging applications with sparse memory access patterns. These techniques span hardware-software co-designs and memory systems. She received her B.S. degrees in Electrical and Computer Engineering and Computer Science from Duke University in 2018 and is a recipient of the NSF Graduate Research Fellowship.

Marcelo Orenes Vera is a PhD candidate in the Department of Computer Science at Princeton University advised by Margaret Martonosi and David Wentzlaff. He received his BSE from University of Murcia. Marcelo is interested in hardware innovations that are modular, to make SoC integration practical. His research focuses on Computer Architecture, from hardware RTL design and verification to software programming models of novel architectures.He has previously worked in the hardware industry at Arm, contributing to the design and verification of three GPU projects. At Princeton, he has contributed in two academic chip tapeouts that aims to improve the performance, power and programmability of several emerging workflows in the broad areas of Machine Learning and Graph Analytics.

more...

The Stanford Graph Learning Workshop on September 16, 2021 will feature two talks from SFB 876. Matthias Fey and Jan Eric Lenssen, from subprojects A6 and B2, will each give a talk about their work on Graph Neural Networks (GNNs). Matthias Fey will talk about his now widely known and used GNN software library PyG (PyTorch Geometric) and its new functionalities in the area of heterogeneous graphs. Jan Eric Lenssen gives an overview of applications of Graph Neural Networks in the areas of computer vision and computer graphics.

Registration to participate in the livestream is available at the following link:

https://www.eventbrite.com/e/stanford-graph-learning-workshop-tickets-167490286957

In a discussion on the topic "Artificial Intelligence: Cutting-edge Research and Applications from NRW", Prof. Dr. Katharina Morik, Head of the Chair of Artificial Intelligence and speaker of the Collaborative Research Center 876, reported live at TU Dortmund University on the research field of Artificial Intelligence and, among other things, on the CRC 876. She hereby explained why Machine Learning is important for securing Germany's future. Participants of the virtual event were able to join in on the live discussion.

A recording of the event is available online!

more...

Runtime and Power-Demand Estimation for Inference on Embedded Neural Network Accelerators

Abstract - Deep learning is an important method and research area in science in general and in computer science in particular. Following the same trend, big companies such as Google implement neural networks in their products, while many new startups dedicate themselves to the topic. The ongoing development of new techniques, caused by the successful use of deep learning methods in many application areas, has led to neural networks becoming more and more complex. This leads to the problem that applications of deep learning are often associated with high computing costs, high energy consumption, and memory requirements. General-purpose hardware can no longer adapt to these growing demands, while cloud-based solutions can not meet the high bandwidth, low power, and real-time requirements of many deep learning applications. In the search for embedded solutions, special purpose hardware is designed to accelerate deep learning applications in an efficient manner, many of which are tailored for applications on the edge. But such embedded devices have typically limited resources in terms of computation power, on-chip memory, and available energy. Therefore, neural networks need to be designed to not only be accurate but to leverage such limited resources carefully. Developing neural networks with their resource consumption in mind requires knowledge about these non-functional properties, so methods for estimating the resource requirements of a neural network execution must be provided. Featuring this idea, the presentation presents an approach to create resource models using common machine learning methods like random forest regression. Those resource models aim at the execution time and power requirements of artificial neural networks which are executed on an embedded deep learning accelerator hardware. In addition, measurement-based evaluation results are shown, using an Edge Tensor Processing Unit as a representative of the emerging hardware for embedded deep learning acceleration.

Judith about herself - I am one of the students who studied at the university for a long time and with pleasure. The peaceful humming cips of Friedrich-Alexander University Erlangen-Nuremberg were my home for many years (2012-2020). During this time, I took advantage of the university's rich offerings by participating in competitions (Audi Autonomous Driving Cup 2018, RuCTF 2020, various ICPCs), working at 3 different chairs (Cell Biology, Computer Architecture, Operating Systems) as a tutor/research assistant, not learning two languages (Spanish, Swahili), and enjoying the culinary delights of the Südmensa. I had many enjoyable experiences at the university, but probably one of the best was presenting part of my master's thesis in Austin, Texas during the 'First International Workshop on Benchmarking Machine Learning Workloads on Emerging Hardware' in 2020. After graduation, however, real-life caught up with me and now I am working as a software developer at a company with the pleasant name 'Dr. Schenk GmBH' in Munich where I write fast and modern C++ code.

Github: Inesteem

LinkedIn: judith-hemp-b1bab11b2

Learning in Graph Neural Networks

Abstract - Graph Neural Networks (GNNs) have become a popular tool for learning representations of graph-structured inputs, with applications in computational chemistry, recommendation, pharmacy, reasoning, and many other areas. In this talk, I will show some recent results on learning with message-passing GNNs. In particular, GNNs possess important invariances and inductive biases that affect learning and generalization. We relate these properties and the choice of the “aggregation function” to predictions within and outside the training distribution.

This talk is based on joint work with Keyulu Xu, Jingling Li, Mozhi Zhang, Simon S. Du, Ken-ichi Kawarabayashi, Vikas Garg and Tommi Jaakkola.

Short bio - Stefanie Jegelka is an Associate Professor in the Department of EECS at MIT. She is a member of the Computer Science and AI Lab (CSAIL), the Center for Statistics, and an affiliate of IDSS and the ORC. Before joining MIT, she was a postdoctoral researcher at UC Berkeley, and obtained her PhD from ETH Zurich and the Max Planck Institute for Intelligent Systems. Stefanie has received a Sloan Research Fellowship, an NSF CAREER Award, a DARPA Young Faculty Award, a Google research award, a Two Sigma faculty research award, the German Pattern Recognition Award and a Best Paper Award at the International Conference for Machine Learning (ICML). Her research interests span the theory and practice of algorithmic machine learning.

Fighting Temperature: The Unseen Enemy for Neural processing units (NPUs)

Abstract - Neural processing units (NPUs) are becoming an integral part in all modern computing systems due to their substantial role in accelerating neural networks. In this talk, we will discuss the thermal challenges that NPUs bring, demonstrating how multiply-accumulate (MAC) arrays, which form the heart of any NPU, impose serious thermal bottlenecks to any on-chip systems due to their excessive power densities. We will also discuss how elevated temperatures severely degrade the reliability of on-chip memories, especially when it comes to emerging non-volatile memories, leading to bit errors in the neural network parameters (e.g., weights, activations, etc.). In this talk, we will also discuss: 1) the effectiveness of precision scaling and frequency scaling (FS) in temperature reductions for NPUs and 2) how advanced on-chip cooling using superlattice thin-film thermoelectric (TE) open doors for new tradeoffs between temperature, throughput, cooling cost, and inference accuracy in NPU chips.

Short bio - Dr. Hussam Amrouch is a Junior Professor at the University of Stuttgart heading the Chair of Semiconductor Test and Reliability (STAR) as well as a Research Group Leader at the Karlsruhe Institute of Technology (KIT), Germany. He earned in 06.2015 his Ph.D. degree in Computer Science (Dr.-Ing.) from KIT, Germany with distinction (summa cum laude). After which, he has founded and led the “Dependable Hardware” research group at KIT. Dr. Amrouch has published so far 115+ multidisciplinary publications, including 43 journals, covering several major research areas across the computing stack (semiconductor physics, circuit design, computer architecture, and computer-aided design). His key research interests are emerging nanotechnologies and machine learning for CAD. Dr. Amrouch currently serves as Associate Editor in Integration, the VLSI Journal as well as a guest and reviewer Editor in Frontiers in Neuroscience.

more...

Improving Automatic Speech Recognition for People with Speech Impairment

Abstract - The accuracy of Automatic Speech Recognition (ASR) systems has improved significantly over recent years due to increased computational power of deep learning systems and the availability of large training datasets. Recognition accuracy benchmarks for commercial systems are now as high as 95% for many (mostly typical) speakers and some applications. Despite these improvements, however, recognition accuracy of non-typical and especially disordered speech is still unacceptably low, rendering the technology unusable for the many speakers who could benefit the most from this technology.

Google’s Project Euphonia aims at helping people with atypical speech be better understood. I will give an overview to our large-scale data collection initiative, and present our research on both effective and efficient adaptation of standard-speech ASR models to work well for a large variety and severity of speech impairments.

Short bio - Katrin earned her Ph.D. from University of Dortmund, supervised by Prof Katharina Morik and Prof Udo Hahn (FSU Jena), in 2010. She has since worked on a variety of NLP, Text Mining, and Speech Processing projects, including eg Automated Publication Classification and Keywording for the German National Library, Large-Scale Patent Classification for the European Patent Office, Sentiment Analysis and Recommender Systems at OpenTable, Neural Machine Translation at Google Translate. Since 2019, Katrin leads the research efforts on Automated Speech Recognition for impaired speech within Project Euphonia, an AI4SG initiative within Google Research.

In her free time, Katrin can be found exploring the beautiful outdoors of the Bay Area by bike or kayak.

Random and Adversarial Bit Error Robustness for Energy-Efficient and Secure DNN Accelerators

Abstract - Deep neural network (DNN) accelerators received considerable attention in recent years due to the potential to save energy compared to mainstream hardware. Low-voltage operation of DNN accelerators allows to further reduce energy consumption significantly, however, causes bit-level failures in the memory storing the quantized DNN weights. Furthermore, DNN accelerators have been shown to be vulnerable to adversarial attacks on voltage controllers or individual bits. In this paper, we show that a combination of robust fixed-point quantization, weight clipping, as well as random bit error training (RandBET) or adversarial bit error training (AdvBET) improves robustness against random or adversarial bit errors in quantized DNN weights significantly. This leads not only to high energy savings for low-voltage operation as well as low-precision quantization, but also improves security of DNN accelerators. Our approach generalizes across operating voltages and accelerators, as demonstrated on bit errors from profiled SRAM arrays, and achieves robustness against both targeted and untargeted bit-level attacks. Without losing more than 0.8%/2% in test accuracy, we can reduce energy consumption on CIFAR10 by 20%/30% for 8/4-bit quantization using RandBET. Allowing up to 320 adversarial bit errors, AdvBET reduces test error from above 90% (chance level) to 26.22% on CIFAR10.

References:

https://arxiv.org/abs/2006.13977

https://arxiv.org/abs/2104.08323

Short bio -David Stutz is a PhD student at the Max Planck Institute for Informatics supervised by Prof. Bernt Schiele and co-supervised by Prof. Matthias Hein from the University of Tübingen. He obtained his bachelor and master degrees in computer science from RWTH Aachen University. During his studies, he completed an exchange program with the Georgia Institute of Technology as well as several internships at Microsoft, Fyusion and Hyundai MOBIS, among others. He wrote his master thesis at the Max Planck Institute for Intelligent Systems supervised by Prof. Andreas Geiger. His PhD research focuses on obtaining "robust" deep neural networks, e.g., considering adversarial examples, corrupted examples or out-of-distribution examples. In a collaboration with IBM Research, his recent work improves robustness against bit errors in (quantized) weights to enable energy-efficient and secure accelerators. He received several awards and scholarships including a Qualcomm Innovation Fellowship, RWTH Aachen University's Springorum Denkmünze and the STEM Award IT sponsored by ZF Friedrichshafen. His work has been published at top venues in computer vision and machine learning including CVPR, IJCV, ICML and MLSys.

Please follow the link below to register to attend the presentation.

more...

Andrea Bommert successfully defended her dissertation entitled "Integration of Feature Selection Stability in Model Fitting" on January 20, 2021. She developed measures for assessing variable selection stability as well as strategies for fitting good models using variable selection stability and applied them successfully.

The members of the doctoral committee were Prof. Dr. Jörg Rahnenführer (supervisor and first reviewer), Prof. Dr. Claus Weihs (second reviewer), Prof. Dr. Katja Ickstadt (examination chair), and Dr. Uwe Ligges ( minutes).

Andrea Bommert is a research assistant at the Faculty of Statistics and a member of the Collaborative Research Center 876 (Project A3).

We cordially congratulate her on completing her doctorate!

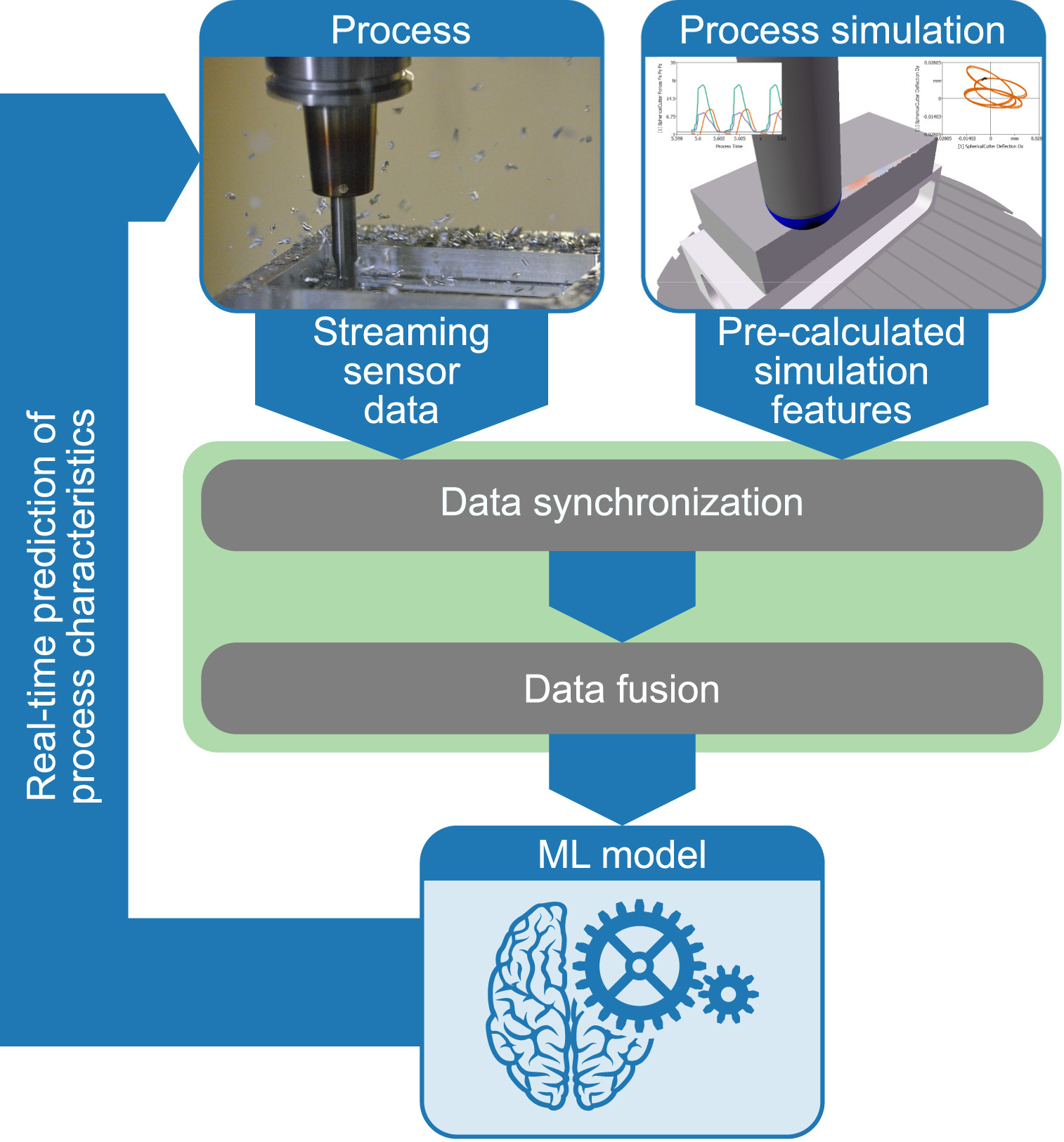

Jacqueline Schmitt from project B3 successfully defended her dissertation titled "Methodology for process-integrated inspection of product quality by using predictive data mining techniques" on February 04, 2021. The verbal examination took place in digital form. The results of the dissertation were presented in a public 45-minute lecture on Zoom. The examination committee was formed by Prof. Dr.-Ing. Andreas Menzel (examination chairman), Prof. Dr.-Ing. Jochen Deuse (rapporteur), Dr.-Ing. Ralph Richter (co-rapporteur) and Prof. Dr. Claus Weihs (co-examiner).

We cordially congratulate her on completing her doctorate!

Abstract of the thesis -- In the conflicting areas of productivity and customer satisfaction, product quality is becoming increasingly important as a competitive factor for long-term market success. In order to simultaneously counter the steadily increasing cost pressure on the market, this means the consistent concentration on internal company processes that influence quality, in particular to reduce technology-related output losses as well as defect and inspection costs. An essential prerequisite for this, in addition to defect prevention and avoidance, is the early detection of deviations as the basis for process-integrated quality control. Increasingly, growing demands for safety, accuracy and robustness are counteracting the speed and flexibility required in the production process, so that a process-integrated inspection of quality-relevant characteristics can only be carried out to a limited extent using conventional methods of production measurement technology. This implies that quality deviations are not immediately recognised and considerable productivity losses can occur.